Real-time robot commands in Sky-Walker 4

Sky-Walker 4 & Lucki robot integration

We are currently exploring how service robotics might play a role within the Sky-Walker platform. This early testing involves the Lucki robot through the OrionStar Robot OpenAPI. It is not a feature announcement or a confirmed development path. Instead, it allows us to learn from practical experiments and better understand how predefined robotic actions could support future workflows.

At this stage, Sky-Walker sends predefined route instructions that the robot executes, and the process is monitored manually. These tests do not include access to real-time location, telemetry, or sensor feedback. There is currently no active plan for IP Matrix integration.
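As a rough illustration of what a predefined route instruction could look like on the sending side, the sketch below builds a JSON payload. The `RouteCommand` class, its field names, and the example waypoint labels are all hypothetical. They are not taken from the OrionStar Robot OpenAPI, whose actual schema should be checked in the vendor documentation.

```python
import json
from dataclasses import asdict, dataclass
from typing import Tuple


@dataclass(frozen=True)
class RouteCommand:
    """Hypothetical predefined route; field names are illustrative only."""
    robot_id: str
    route_name: str
    waypoints: Tuple[str, ...]


def to_payload(cmd: RouteCommand) -> str:
    """Serialize a route command to a JSON string with a stable key order."""
    return json.dumps(asdict(cmd), sort_keys=True)
```

Calling `to_payload(RouteCommand("lucki-01", "lobby-loop", ("reception", "elevator")))` would yield a JSON object carrying the robot ID, the route name, and the ordered waypoints. Nothing flows back in this sketch, which matches the one-way, manually monitored setup described above.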

This approach helps us learn without building more complex layers before there is a clearly defined direction.

Even at this early stage, Sky-Walker enables us to experiment with real-time robot control, allowing movement tests, navigation trials, and workflow simulations inside controlled spaces. These experiments help us refine how commands can be managed and coordinated through Sky-Walker in future deployments.

Current limitations

Working with robots such as Lucki for real-time navigation, task execution, and sensor-driven operations is possible today, but only through the official OrionStar SDK. While the SDK provides access to movement commands, status information, and basic interaction features, it has important limitations to keep in mind when developing against it.

1. No native multi-robot management

The OrionStar SDK is built for controlling a single robot, not a fleet.

There is no built-in support for:

- multi-robot task scheduling

- shared navigation routes

- fleet-level supervision

This means every robot operates independently, making coordinated operations difficult.
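To make the gap concrete, here is a minimal sketch of the kind of scheduling logic an integrator would have to supply. It distributes tasks across robots in round-robin order. The function name and the robot and task identifiers are hypothetical, and a real scheduler would also need to consider battery level, location, and robot availability.

```python
from typing import Dict, List


def assign_tasks(robot_ids: List[str], tasks: List[str]) -> Dict[str, List[str]]:
    """Distribute tasks across robots in round-robin order."""
    if not robot_ids:
        raise ValueError("at least one robot is required")
    plan: Dict[str, List[str]] = {robot_id: [] for robot_id in robot_ids}
    for index, task in enumerate(tasks):
        # Task 0 goes to the first robot, task 1 to the second, and so on.
        plan[robot_ids[index % len(robot_ids)]].append(task)
    return plan
```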

2. Requires a custom integration layer

To use Lucki inside a larger application or workflow, you must build your own:

- middleware / API bridge

- communication layer

- orchestration logic

This works, but it’s extra effort and becomes harder to maintain as your system grows.
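One common way to keep such a layer maintainable is to hide the vendor call behind a small interface of your own. The sketch below assumes this pattern; `RobotBridge`, `Transport`, `fake_transport`, and the `start_route` action name are hypothetical and do not correspond to real OrionStar SDK calls.

```python
from typing import Any, Callable, Dict

# A transport is any callable that delivers (action, params) and returns a reply.
Transport = Callable[[str, Dict[str, Any]], Dict[str, Any]]


class RobotBridge:
    """Hypothetical bridge that isolates application code from the vendor call."""

    def __init__(self, transport: Transport) -> None:
        self._transport = transport

    def start_route(self, robot_id: str, route_name: str) -> Dict[str, Any]:
        """Ask the transport to start a named route on one robot."""
        params = {"robot_id": robot_id, "route_name": route_name}
        return self._transport("start_route", params)


def fake_transport(action: str, params: Dict[str, Any]) -> Dict[str, Any]:
    """Stand-in for a real SDK or HTTPS call; echoes the request back."""
    return {"ok": True, "action": action, "params": params}
```

Because the transport is injected, the rest of the application depends only on `RobotBridge`, and the real SDK or HTTPS call can be swapped in (or replaced with `fake_transport` during tests) without touching workflow code.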

3. Updates can break your integration

OrionStar frequently updates its firmware and SDK.

Because you are building your own integration on top of their driver, any of the following can require fixes on your end:

- SDK changes

- protocol adjustments

- behavior modifications

This adds ongoing maintenance responsibilities.
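One way to catch such changes early is to compare the SDK version a robot reports with the version the integration was last tested against. The sketch below assumes the version is available as a dotted numeric string such as `1.4.2` and that a change in the first number signals breaking changes. Both are assumptions, not documented OrionStar behavior, and `TESTED_MAJOR` is a hypothetical value.

```python
from typing import Tuple

# Hypothetical: the SDK major version this integration was last tested against.
TESTED_MAJOR = 1


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn a dotted numeric version string like '1.4.2' into (1, 4, 2)."""
    return tuple(int(part) for part in text.strip().split("."))


def is_tested_version(sdk_version: str, tested_major: int = TESTED_MAJOR) -> bool:
    """Return True when the reported major version matches the tested one."""
    return parse_version(sdk_version)[0] == tested_major
```

A check like this could run at startup and raise an alert before commands are sent to a robot running an untested version.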

4. Extra complexity for navigation and task logic

The robot can move, avoid obstacles, and report status, but you must also manage:

- global path planning

- route optimization

- priority/task switching

- coordination with external systems

- fallback behaviors and error recovery

These behaviors directly impact how consistently autonomous tasks perform at scale.
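As an example of the fallback and error-recovery logic mentioned in the list above, the following sketch retries an action a fixed number of times and then hands the last error to a fallback handler. The function name and the retry count are illustrative; a production system would likely add delays between attempts and distinguish recoverable from unrecoverable errors.

```python
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def run_with_fallback(
    action: Callable[[], T],
    fallback: Callable[[Exception], T],
    attempts: int = 3,
) -> T:
    """Try the action up to `attempts` times, then call the fallback."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error: Optional[Exception] = None
    for _ in range(attempts):
        try:
            return action()
        except Exception as exc:  # a real system would catch narrower errors
            last_error = exc
    assert last_error is not None  # the loop ran at least once and failed
    return fallback(last_error)
```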

5. Limited data aggregation and insight

Each robot logs its own data locally.

There is no native support for:

- cross-robot metrics

- performance analytics

- real-time dashboards

- unified reporting

As a result, long-term optimization becomes difficult without building your own data pipeline.
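A first step in such a pipeline could be as simple as merging per-robot logs into shared metrics. The sketch below assumes each robot's log has already been reduced to a list of task durations in seconds. The input format and the function name are hypothetical.

```python
from statistics import mean
from typing import Dict, List, Tuple


def summarize_durations(
    logs: Dict[str, List[float]],
) -> Tuple[Dict[str, float], float]:
    """Return the mean task duration per robot and across the whole fleet."""
    all_durations = [value for durations in logs.values() for value in durations]
    if not all_durations:
        raise ValueError("no durations to summarize")
    # Robots with no recorded tasks are left out of the per-robot summary.
    per_robot = {
        robot_id: mean(durations)
        for robot_id, durations in logs.items()
        if durations
    }
    return per_robot, mean(all_durations)
```

Note that the fleet figure is averaged over all tasks rather than over the per-robot means, so robots that complete more tasks carry more weight.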

In short, Sky-Walker transforms robots from devices that execute predefined routes into fully coordinated, highly controllable actors inside a larger automation ecosystem.

This expanded control not only overcomes the limitations of preset commands but also opens the door to testing new behaviors, refining navigation, and building richer, more adaptive robotic experiences.

How our system connects to the Lucki Bot using the OrionStar Driver

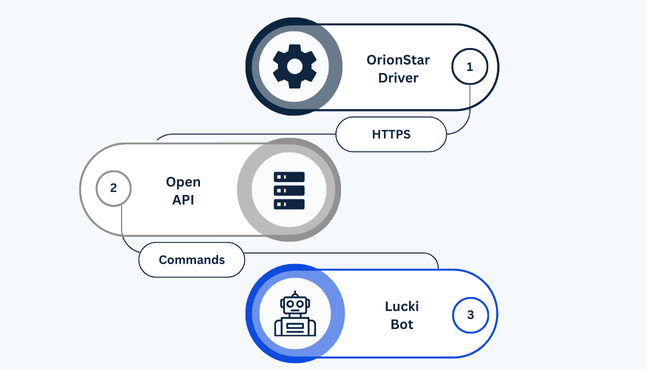

The diagram above shows the communication flow behind our current integration with the Lucki Bot platform. This setup allows our system to reliably send commands to the robot while maintaining secure, structured communication through the OrionStar ecosystem.

Because we are still refining our robotics integration layer, this workflow provides a clean and testable setup for evaluating command delivery, API stability, and real-world responsiveness before introducing more advanced robotic behavior.

The process works in three simple stages:

OrionStar driver (HTTPS communication)

At the top of the workflow is the OrionStar Driver, which acts as the official interface for securely communicating with OrionStar-powered robots. Through HTTPS, the driver exposes the functionality needed to interact with the robot, ensuring data integrity and encrypted communication throughout all exchanges.

Open API layer (command handling)

Sitting at the center of the flow is our Open API implementation. This layer receives requests from the OrionStar Driver and structures them into standardized commands. It acts as the bridge between our own logic and the robot’s capabilities, ensuring that every instruction is validated, well-formatted, and delivered predictably.

Lucki Bot (execution on the robot)

Finally, the commands are sent to the Lucki Bot, where they are executed in real time. Whether directing movement, triggering interactions, or controlling robot behavior, the Lucki Bot responds according to the instructions passed down through the API and driver layers.

This architecture gives us a reliable and modular approach to robot communication. By clearly separating command creation, API handling, and robot execution, we’re able to test new robotic features safely and efficiently while preparing for more complex integrations in the future.
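The validation step in the API layer can be pictured with a small sketch like the one below, which checks an incoming request against an allow-list and returns it in a normalized shape. The action names, the field names, and `validate_command` itself are hypothetical and are not part of the OrionStar Driver or our production API.

```python
from typing import Any, Dict

# Hypothetical allow-list; the real set of supported actions may differ.
ALLOWED_ACTIONS = {"start_route", "stop", "speak"}


def validate_command(raw: Dict[str, Any]) -> Dict[str, Any]:
    """Reject malformed requests and return a normalized command dict."""
    action = raw.get("action")
    if not isinstance(action, str) or action not in ALLOWED_ACTIONS:
        raise ValueError(f"unsupported action: {action!r}")
    robot_id = raw.get("robot_id")
    if not isinstance(robot_id, str) or not robot_id:
        raise ValueError("robot_id must be a non-empty string")
    # Copy params so later changes to the input do not affect the command.
    params = dict(raw.get("params") or {})
    return {"action": action, "robot_id": robot_id, "params": params}
```

Keeping this check separate from both command creation and robot execution mirrors the separation of stages described above: a rejected request never reaches the robot.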

Robot types

Robots come in a wide range of shapes and capabilities, each designed for specific tasks and environments. From compact, nimble robots ideal for precision work in tight spaces to large, heavy-duty machines built for industrial or outdoor operations, understanding the different types of robots is essential for choosing the right one for any task. Some models, like humanoid robots, excel at interacting with people, while specialized robotic arms focus on high-precision manufacturing. Other hybrid designs combine mobility and strength to handle complex operations in varied settings. In this section, we’ll explore the main categories of robots, their unique strengths, and the applications where they perform best.

Usage

🤖 Manufacturing & Industry: Robots streamline assembly lines, handle repetitive tasks, and perform precise manufacturing operations. Equipped with sensors and AI, they can detect defects early, reduce human error, and improve productivity while ensuring workplace safety.

🏗️ Construction & Infrastructure: Robots assist in bricklaying, concrete pouring, welding, and inspections of hard-to-reach areas. Autonomous or semi-autonomous machines improve efficiency, reduce accidents, and allow work to continue in hazardous environments.

🚓 Public Safety & Law Enforcement: Robots are used in bomb disposal, surveillance, and hazardous material handling. Equipped with cameras and sensors, they keep human operators safe while navigating dangerous situations, such as fires, chemical spills, or active crime scenes.

📦 Logistics & Delivery: Autonomous robots transport packages, groceries, and medical supplies in warehouses, offices, and urban areas. They optimize delivery routes, reduce human labor, and can operate in challenging environments, including crowded or remote locations.

⚡ Energy & Utilities: Robots inspect power lines, pipelines, wind turbines, and solar panels. They provide high-resolution data, reduce downtime, and eliminate the need for humans to perform risky inspections at height or in harsh conditions.

🌍 Environmental Protection & Research: Robots monitor forests, oceans, and wildlife. Underwater or aerial robotic systems track animal populations, collect climate data, and detect illegal activities like poaching or pollution, supporting conservation efforts worldwide.

🎬 Media & Entertainment: Robots like camera bots and animatronics enhance film production, live shows, and interactive exhibits. They enable creative shots, complex stunts, and immersive experiences that were previously difficult or dangerous to achieve.

🚑 Healthcare & Assistance: Medical robots assist in surgeries, patient monitoring, and rehabilitation. Delivery robots transport medicines and lab samples within hospitals, while companion robots provide support for elderly or disabled individuals, ensuring timely care and safety.

Robots in the media

Recently, Disney captured media attention with a remarkable new robotic creation, a walking, talking snow‑robot version of Olaf from Frozen. This animatronic character is more than just a robot; it’s a fusion of cutting‑edge robotics, AI, and beloved storytelling to bring an animated character into living reality.

Read more about it at Gazeta Express and The Walt Disney Company.

Future of robots

The future of robots is being shaped by rapid advances in autonomy, sensing, and intelligent control systems. AI-powered navigation, computer vision, and SLAM (Simultaneous Localization and Mapping) will allow robots to operate with minimal human supervision, even in complex, cluttered, or unpredictable environments. Improvements in mobility, battery efficiency, and payload handling will expand their capabilities in manufacturing, logistics, inspections, healthcare, and service applications.

Robotic platforms like next-generation humanoids and mobile manipulators embody this new phase: combining long-range mobility, adaptable dexterity, and intelligent decision-making to support fully autonomous operations and data-driven workflows. As robots integrate with IoT networks, cloud computing, and enterprise automation systems, they will enable end-to-end inspection pipelines, smart-facility monitoring, and autonomous material movement.

Progress in safety standards, human–robot interaction protocols, and operational regulations will be critical. Once these frameworks mature, robots, including advanced platforms built for autonomy and collaboration, will evolve from specialized tools into core infrastructure for industrial automation, resilient supply chains, and next-generation urban services.

Conclusion

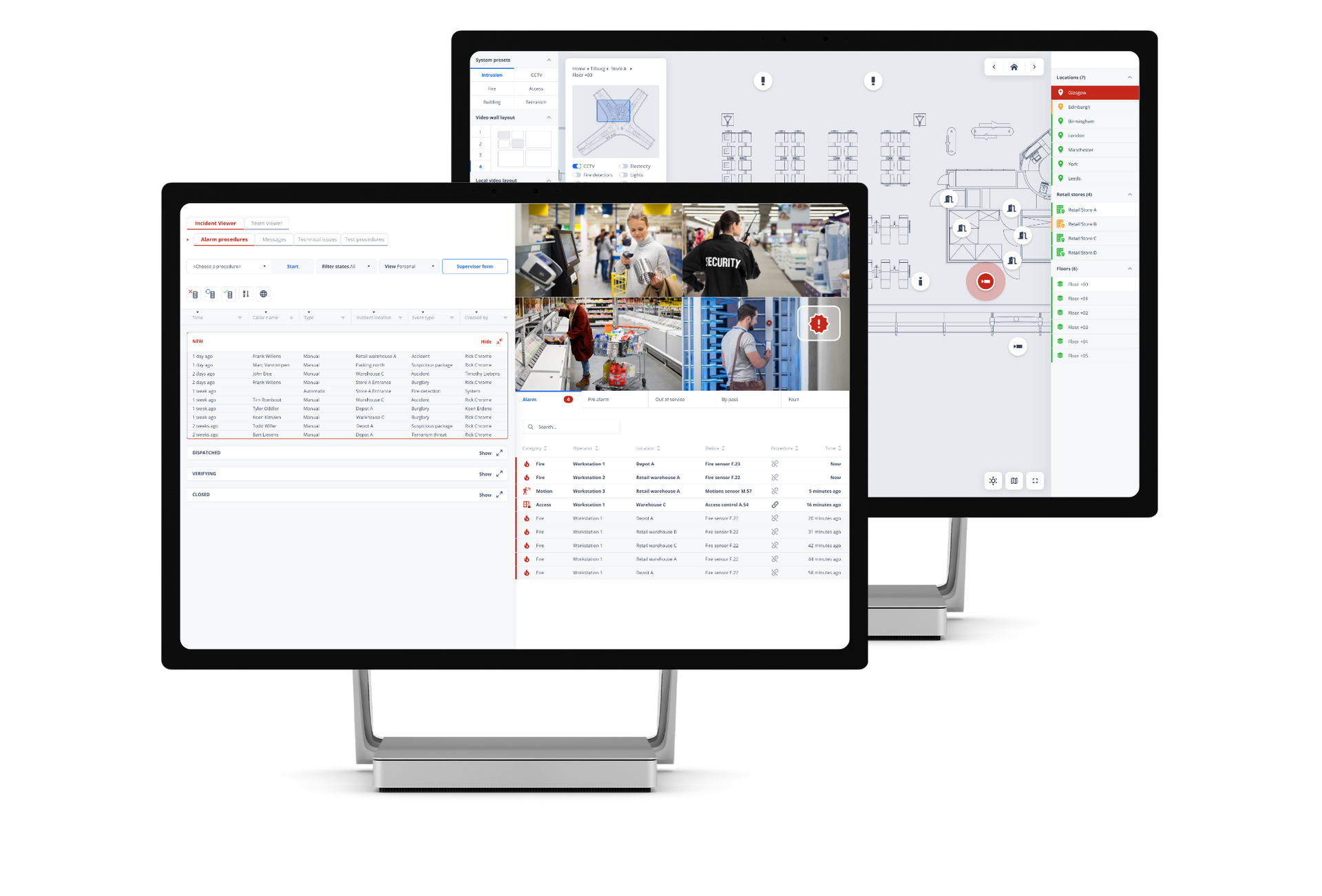

Robots are rapidly transforming from specialized machines into essential partners across a wide range of industries, delivering new levels of precision, automation, and operational efficiency. As organizations adopt more advanced robotic platforms, the ability to connect, manage, and coordinate these systems becomes increasingly vital.

Solutions like our robotic management platform bring all of this together by offering a unified, intelligent interface that links robots, orchestrates tasks, and streamlines real-time decision-making. With features such as autonomous navigation, sensor-driven insights, and multi-robot coordination, the future of robotic operations isn’t just on the horizon; it’s already unfolding.

As standards evolve and digital ecosystems grow, integrated platforms like ours will play a central role in unlocking the full potential of robotic technology.

